A/B testing enables you to test the impact of product changes and understand how they affect your users' behaviour. For example:

- How changes to your onboarding flow affect your signup rate.

- Whether different designs of your app's dashboard increase user engagement and retention.

- Whether a free trial period or a money-back guarantee results in more customers.

A/B tests are also referred to as "experiments", and this is how we refer to them in the PostHog app.

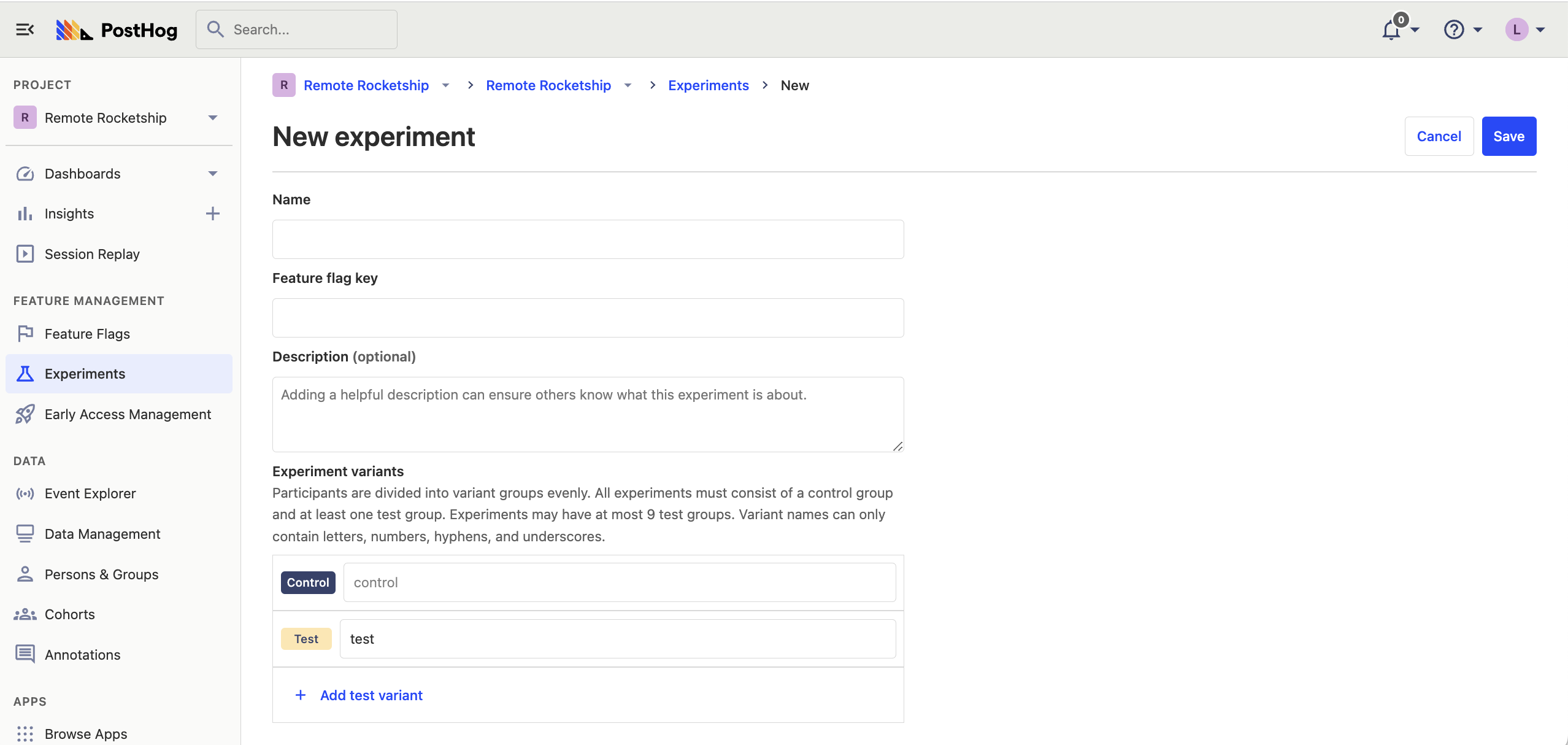

How to create an experiment in PostHog

To create a new experiment, go to the experiments tab in the PostHog app, and click on the "New experiment" button in the top right.

This presents you with a form where you can complete the details of your new experiment:

Here's a breakdown of each field in the form:

Feature flag key

Each experiment is backed by a feature flag. In your code, you use this feature flag key to check which experiment variant the user has been assigned to.

Advanced: It's possible to create experiments without using PostHog's feature flags (for example, if you're using a different library for feature flags). For more info, read our docs on implementing experiments without feature flags.

Experiment variants

By default, all experiments have a 'control' and a 'test' variant. If you'd like to test more variants, you can use this field to add them and run multivariant tests.

Participants are automatically split equally between variants. If you want to assign specific users to a specific variant, you can set up manual overrides.

Participant type

The default is users, but if you've created groups, you can run group-targeted experiments. This will test how a change affects your product at a group-level by providing the same variant to every member of a group.

Participant targeting

By default, your experiment will target 100% of participants. If you'd like to target a more specific set of participants, or change the rollout percentage, you'll need to do this by changing the release conditions for the feature flag used by your experiment.

Below is a video showing how to navigate there:

Experiment goal

Setting your goal metric enables PostHog to calculate the impact of your experiment and whether your results are statistically significant. You can select between a "trend" or "conversion funnel" goal.

You then set the minimum acceptable improvement. PostHog combines historical data from your goal metric with your desired improvement rate to predict how long you need to run your experiment to see statistically significant results.
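To give a feel for how a baseline rate and a minimum improvement translate into a run length, here is a rough Python sketch based on Lehr's rule of thumb for comparing two proportions. It is a generic approximation and not necessarily the calculation PostHog performs. The function names are hypothetical, and the sketch assumes the minimum improvement is an absolute change in conversion rate, with roughly 80% power and a 5% two-sided significance level.

```python
import math


def participants_per_variant(baseline_rate: float, min_improvement: float) -> int:
    """Approximate participants needed per variant (Lehr's rule of thumb).

    n is roughly 16 * p * (1 - p) / d^2, where p is the baseline conversion
    rate and d is the smallest absolute change you want to detect.
    """
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    if min_improvement <= 0:
        raise ValueError("min_improvement must be positive")
    variance = baseline_rate * (1 - baseline_rate)
    return math.ceil(16 * variance / min_improvement ** 2)


def estimated_days(per_variant: int, variants: int, daily_participants: int) -> int:
    """Days needed to fill every variant at the current traffic level."""
    if daily_participants <= 0:
        raise ValueError("daily_participants must be positive")
    return math.ceil(per_variant * variants / daily_participants)


# Example: 20% baseline conversion, detect a 5 percentage point change,
# two variants, 500 new participants per day (all illustrative numbers).
needed = participants_per_variant(0.20, 0.05)
days = estimated_days(needed, variants=2, daily_participants=500)
```

With these illustrative numbers the rule suggests roughly a thousand participants per variant. Halving the minimum improvement roughly quadruples the number of participants needed, which is why small improvements take much longer to detect.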

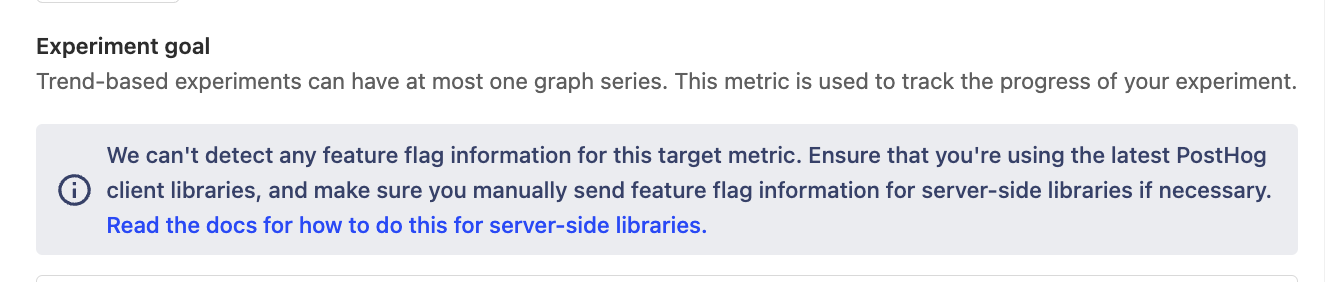

Note: If you select a server-side event, you may see a warning that no feature flag information can be detected with the event. To resolve this issue, see step 2 of adding your experiment code and how to submit feature flag information with your events.

Adding your experiment code

Step 1: Fetch the feature flag

To check which variant of your experiment to show to your participants, use the following code to fetch the feature flag:
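As a minimal Python sketch, assuming a server-side client object that exposes a `get_feature_flag(key, distinct_id)` method returning the variant name: the `FlagClient` interface, the flag key, and the helper names below are hypothetical and stand in for whichever SDK you use, so check your SDK's reference for the exact call.

```python
from typing import Protocol, Union


class FlagClient(Protocol):
    """Hypothetical interface for an SDK client that can evaluate flags."""

    def get_feature_flag(self, key: str, distinct_id: str) -> Union[str, bool, None]:
        ...


# Hypothetical flag key, for illustration only.
EXPERIMENT_FLAG_KEY = "experiment-feature-flag-key"


def resolve_variant(client: FlagClient, distinct_id: str,
                    flag_key: str = EXPERIMENT_FLAG_KEY) -> str:
    """Return the variant assigned to this user, falling back to 'control'."""
    try:
        variant = client.get_feature_flag(flag_key, distinct_id)
    except Exception:
        # If the flag cannot be evaluated, show the existing experience.
        variant = None
    # Anything that is not a variant name (None, True, False) counts as control.
    return variant if isinstance(variant, str) else "control"


def render_dashboard(client: FlagClient, distinct_id: str) -> str:
    """Branch on the variant to decide which experience to show."""
    if resolve_variant(client, distinct_id) == "test":
        return "new dashboard"
    return "current dashboard"
```

Falling back to 'control' is a design choice in this sketch: users who are not in the experiment, or whose flag cannot be evaluated, keep seeing the current behaviour.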

Feature flags are not yet supported in our Java, Rust, and Elixir SDKs. If you use one of these, see our docs on how to run experiments without feature flags. This also applies to running experiments using our API.

Step 2 (server-side only): Add the feature flag property to your events

For any server-side events that are also goal metrics for your experiment, you need to include a property $feature/experiment_feature_flag_name: variant_name when capturing those events. This ensures that the event is attributed to the correct experiment variant (e.g., test or control).

This step is not required for events that are submitted via our client-side SDKs (e.g., JavaScript, iOS, Android, React, React Native).
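The property described in step 2 can be built with a small helper like the one below. This is a sketch: `with_experiment_variant` is a hypothetical name, and the resulting dictionary is meant to be passed as the event properties to whatever capture call your server-side SDK provides.

```python
from typing import Any, Dict, Optional


def with_experiment_variant(flag_key: str, variant: str,
                            properties: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Return event properties that include the experiment's variant.

    Adds a "$feature/<flag_key>" property set to the variant name, without
    modifying the dictionary that was passed in.
    """
    merged = dict(properties or {})
    merged[f"$feature/{flag_key}"] = variant
    return merged


# Illustrative values: the flag key, variant, and extra property are made up.
event_properties = with_experiment_variant(
    "experiment-feature-flag-key", "test", {"plan": "pro"}
)
```

Use the same variant value that the feature flag returned for that user, so the goal event is attributed to the variant the user actually saw.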

Testing and launching your experiment

Once you've written your code, it's a good idea to test that each variant behaves as you'd expect. If you find out your implementation had a bug after you've launched the experiment, you lose days of effort as the experiment results can no longer be trusted.

The best way to do this is to add an optional override to your release conditions. For example, you can create an override that assigns a user to the 'test' variant if their email is your own (or that of someone in your engineering team). To do this:

- Go to your experiment feature flag.

- Ensure the feature flag is enabled by checking the "Enable feature flag" box.

- Add a new condition set with the condition email = your_email@domain.com. Set the rollout percentage for this set to 100%.

- Set the optional override for the variant you'd like to assign these users to.

- Click "Save".

Once you're satisfied, you're ready to launch your experiment.

Note: The feature flag is activated only when you launch the experiment, or if you've manually checked the "Enable feature flag" box.

Note: While the PostHog toolbar enables you to toggle feature flags on and off, this only works for active feature flags and won't work for your experiment feature flag while it is still in draft mode.

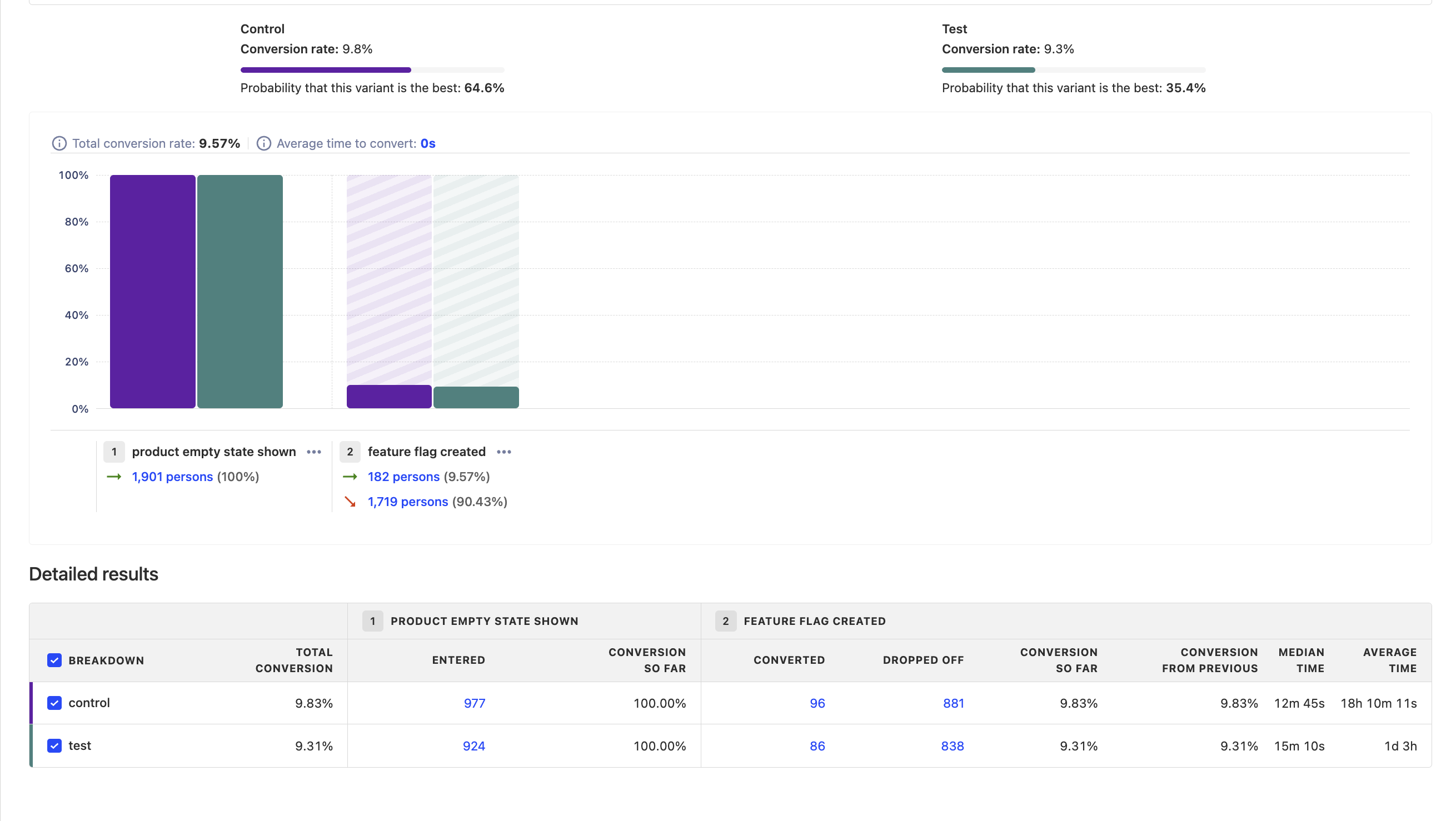

Viewing experiment results

While the experiment is running, you can see results on the page:

Sometimes, at the beginning of an experiment, results can be skewed to one side while data is still being gathered. Peeking at preliminary results is not a problem, but making quick decisions based on them is. We advise you to wait until your experiment has had a chance to gather enough data.

Ending an experiment

Here is a checklist of things to do when you're ready to end your experiment:

- Click the "Stop" button on the experiment page. This ensures the final results are stored.

- Roll out the winning variant of your experiment by setting the feature flag conditions accordingly. This ensures that your users see the winning variant without needing to make any code changes.

- Remove the experiment and losing variant's code to launch the winning variant to all your users.

- Share results with your team.

- Document conclusions and findings in the "description" field of your PostHog experiment. This helps preserve historical context for future team members.

- Archive the experiment.

Further reading

Want to learn more about how to run successful experiments in PostHog? Try these tutorials: